Observability strategies to not overload engineering teams — OpenTelemetry Strategy.

- Nicolas Takashi

- Observability, Infrastructure

- November 15, 2022

OpenTelemetry provides capabilities to democratize observability data and empowers engineering teams.

One of the strategies I mentioned in my first post, Observability strategies to not overload engineering teams, is leveraging OpenTelemetry auto-instrumentation to achieve observability without requesting engineering effort.

Today, I’ll show you how to collect metrics and traces from a Python service without code changes.

Info

To keep the content cleaner, I will leave out some settings, such as the Prometheus configuration file.

What is OpenTelemetry Auto Instrumentation

OpenTelemetry provides an auto-instrumentation feature that acts as an agent, collecting telemetry data from a non-instrumented application.

This is what most O11y vendors, such as New Relic and Datadog, do to collect telemetry data and push it to their platforms. It is a valuable feature because engineering teams can achieve observability with zero instrumentation effort.
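To make the idea of an agent more concrete, here is a minimal conceptual sketch of patching a function at startup so that every call is recorded, while the application code stays untouched. It is not OpenTelemetry's actual implementation; the names instrument, RECORDED_SPANS, and app_module are hypothetical and exist only for this illustration.

```python
import functools
import time
import types

# Hypothetical in-memory sink; a real agent would export spans to a backend.
RECORDED_SPANS = []


def instrument(owner, func_name):
    """Swap owner.func_name for a wrapper that records one span per call."""
    original = getattr(owner, func_name)

    @functools.wraps(original)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        status = "ok"
        try:
            return original(*args, **kwargs)
        except Exception:
            status = "error"
            raise
        finally:
            # Runs on both success and failure, so every call leaves a record.
            RECORDED_SPANS.append({
                "name": func_name,
                "status": status,
                "duration_s": time.perf_counter() - start,
            })

    setattr(owner, func_name, wrapper)


def server_request():
    """Application code: it contains no telemetry logic at all."""
    return "Ok"


# Stand-in for the application's module namespace.
app_module = types.SimpleNamespace(server_request=server_request)

# The "agent" patches the handler at startup, before any request is served.
instrument(app_module, "server_request")

print(app_module.server_request())
print(RECORDED_SPANS[-1]["name"], RECORDED_SPANS[-1]["status"])
```

The important property is that server_request itself never changes; only the reference that callers use is replaced.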

Demo application

To help us understand how auto-instrumentation works, I’ve created the following simple Python service.

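    from flask import Flask

    app = Flask(__name__)


    # Single endpoint used to generate traffic for the demo.
    @app.route("/status")
    def server_request():
        return "Ok"


    if __name__ == "__main__":
        # Listen on all interfaces so the port can be published from the container.
        app.run("0.0.0.0", port=9090)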

Docker Image

This Docker image has all the required dependencies including OpenTelemetry auto instrumentation packages.

    # syntax=docker/dockerfile:1
    FROM python:3.8

    WORKDIR /app

    RUN pip3 install opentelemetry-distro opentelemetry-exporter-otlp flask
    RUN opentelemetry-bootstrap --action=install

    COPY ./app/server.py .

    CMD opentelemetry-instrument \
        python \
        server.py

Before we move forward, I would like to highlight the CMD entry, where we wrap the python server.py command with the opentelemetry-instrument command. This wrapper is what does the auto-instrumentation work; we don’t need to change anything else on the application side to collect telemetry data.

Service Infrastructure

Using the following Docker Compose configuration, let’s build the Docker image we defined above.

The opentelemetry-instrument command accepts flags or environment variables that let you configure exporters, protocols, and other properties. For more information about the available environment variables, see the OpenTelemetry documentation.

    version: '3.8'

    services:
      server:
        build:
          context: .
          dockerfile: ./app/Dockerfile
        environment:
          - OTEL_TRACES_EXPORTER=otlp
          - OTEL_SERVICE_NAME=server
          - OTEL_EXPORTER_OTLP_ENDPOINT=http://otel:4317
        ports:
          - 9091:9090

Above, we configure the traces exporter to use OTLP (the OpenTelemetry Protocol), set the service name that will appear on the traces to server, and point the exporter at the OpenTelemetry Collector endpoint.
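A typo in one of these variables usually shows up only as missing traces, so a small pre-flight check can save some debugging time. The sketch below is a hypothetical helper (check_otel_env is not part of OpenTelemetry) that assumes the endpoint is written as a URL with an http or https scheme, as in the Compose file above.

```python
from urllib.parse import urlparse

# The three variables set in the Compose file for the server service.
EXPECTED_VARS = (
    "OTEL_TRACES_EXPORTER",
    "OTEL_SERVICE_NAME",
    "OTEL_EXPORTER_OTLP_ENDPOINT",
)


def check_otel_env(env):
    """Return a list of human-readable problems found in the OTEL_* settings."""
    problems = [f"{name} is not set" for name in EXPECTED_VARS if not env.get(name)]

    endpoint = env.get("OTEL_EXPORTER_OTLP_ENDPOINT", "")
    if endpoint:
        parsed = urlparse(endpoint)
        # Assumption of this sketch: the endpoint carries an explicit scheme.
        if parsed.scheme not in ("http", "https"):
            problems.append(f"endpoint {endpoint!r} has no http(s) scheme")
        elif not parsed.hostname:
            problems.append(f"endpoint {endpoint!r} has no host")
    return problems


# Values copied from the Compose file; an empty list means no problem was found.
sample_env = {
    "OTEL_TRACES_EXPORTER": "otlp",
    "OTEL_SERVICE_NAME": "server",
    "OTEL_EXPORTER_OTLP_ENDPOINT": "http://otel:4317",
}
print(check_otel_env(sample_env))
```

In a container you could pass os.environ instead of sample_env and fail fast when the returned list is not empty.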

Observability Infrastructure

Now let’s add the building blocks to provide the observability tooling infrastructure.

OpenTelemetry Collector

The OpenTelemetry Collector is the component that receives, processes, and exports the telemetry data produced by the Python application to backends such as Prometheus and Jaeger.

OpenTelemetry Collector Configuration

The configuration below receives the traces produced by the application over OTLP, processes the spans, and exports them to Jaeger.

    receivers:
      otlp:
        protocols:
          grpc:

      # Dummy receiver that's never used, because a pipeline is required to have one.
      otlp/spanmetrics:
        protocols:
          grpc:
            endpoint: "localhost:65535"

    processors:
      batch:
      spanmetrics:
        metrics_exporter: prometheus

    exporters:
      otlp:
        endpoint: jaeger:4317
        tls:
          insecure: true
      jaeger:
        endpoint: "jaeger:4317"
        tls:
          insecure: true
      prometheus:
        endpoint: "0.0.0.0:8989"

    service:
      pipelines:
        traces:
          receivers: [otlp]
          processors: [spanmetrics, batch]
          exporters: [otlp]
        metrics/spanmetrics:
          receivers: [otlp/spanmetrics]
          exporters: [prometheus]

Since all the spans pass through the collector, we can leverage a processor called spanmetrics, which exposes the number of calls and the latency of every operation as metrics in the Prometheus format.

This approach generates metrics from spans, giving us two different types of telemetry data out of the box.
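To illustrate how spans can be turned into metrics, here is a simplified sketch of the aggregation idea: one call counter and one latency histogram per service and operation. It is not the processor's real code, and the bucket bounds, the SpanMetrics class, and the sample spans are assumptions made for this example.

```python
from bisect import bisect_left
from collections import defaultdict

# Hypothetical latency bucket upper bounds, in milliseconds.
BUCKET_BOUNDS_MS = [2, 10, 50, 250, 1000]


class SpanMetrics:
    """Aggregate finished spans into a call counter and a latency histogram."""

    def __init__(self, bounds):
        self.bounds = list(bounds)
        self.calls_total = defaultdict(int)
        # One slot per finite bound plus a final slot for the +Inf bucket.
        self.latency_buckets = defaultdict(lambda: [0] * (len(self.bounds) + 1))

    def observe(self, service, operation, duration_ms):
        key = (service, operation)
        self.calls_total[key] += 1
        # bisect_left returns the first bound >= duration ("less or equal" bucket).
        self.latency_buckets[key][bisect_left(self.bounds, duration_ms)] += 1

    def cumulative(self, service, operation):
        """Return cumulative bucket counts, the way Prometheus histograms store them."""
        running, result = 0, []
        for count in self.latency_buckets[(service, operation)]:
            running += count
            result.append(running)
        return result


metrics = SpanMetrics(BUCKET_BOUNDS_MS)
# Made-up span durations for the demo service's /status operation.
for duration_ms in (1.2, 8.0, 300.0):
    metrics.observe("server", "/status", duration_ms)

print(metrics.calls_total[("server", "/status")])
print(metrics.cumulative("server", "/status"))
```

Each span contributes to exactly one bucket, and the cumulative view is what a histogram query later reads.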

Docker-Compose file

Now that we have the OpenTelemetry Collector configuration, we can spin up Jaeger and Prometheus using the following configuration:

    version: '3.8'

    services:
      prometheus:
        image: prom/prometheus:latest
        container_name: prometheus
        restart: unless-stopped
        volumes:
          - ./conf/prometheus/prometheus.yml:/etc/prometheus/prometheus.yml
        command:
          - '--config.file=/etc/prometheus/prometheus.yml'
          - '--storage.tsdb.path=/prometheus'
          - '--web.console.libraries=/etc/prometheus/console_libraries'
          - '--web.console.templates=/etc/prometheus/consoles'
          - '--web.enable-lifecycle'
        ports:
          - 9090:9090

      jaeger:
        image: jaegertracing/all-in-one:1.39
        restart: unless-stopped
        command:
          - --collector.otlp.enabled=true
        ports:
          - "16686:16686"
        environment:
          - METRICS_STORAGE_TYPE=prometheus
          - PROMETHEUS_SERVER_URL=http://prometheus:9090
        depends_on:
          - prometheus

      otel:
        image: otel/opentelemetry-collector-contrib
        command:
          - --config=/etc/otel-collector-config.yaml
        volumes:
          - ./conf/otel-collector-config.yaml:/etc/otel-collector-config.yaml
        depends_on:
          - jaeger

      server:
        build:
          context: .
          dockerfile: ./app/Dockerfile
        environment:
          - OTEL_TRACES_EXPORTER=otlp
          - OTEL_SERVICE_NAME=server
          - OTEL_EXPORTER_OTLP_ENDPOINT=http://otel:4317
        ports:
          - 9091:9090
        depends_on:
          - otel

I would just like to highlight the environment variables in the jaeger service. Since the spanmetrics processor is active on the OpenTelemetry Collector, we can leverage the SPM feature from Jaeger; for more information, see the Jaeger documentation.

The Final Result

It’s time to see the outcome; all of those configurations will support us in collecting telemetry data that will be useful for the entire company to start adopting observability without requiring engineering efforts.

HTTP Load Testing

To see the telemetry data properly, I’ll generate a small load on the service using Vegeta.

    echo "GET http://localhost:9091/status" | vegeta attack -duration=60s | vegeta report

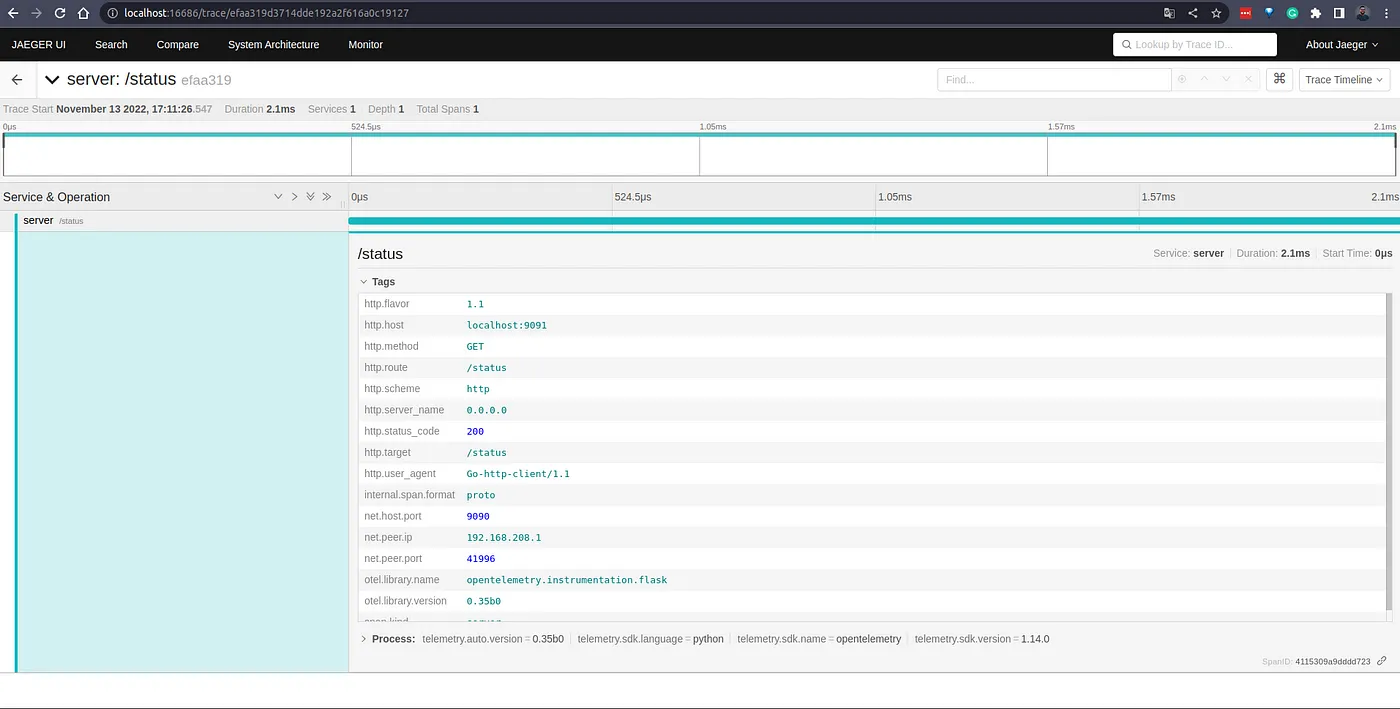
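If Vegeta is not available, a rough stand-in can be written with the Python standard library. The sketch below sends requests one after another and counts the status codes; unlike Vegeta it has no rate control or latency report. The helper names http_status and run_load are hypothetical, and the URL in the usage note assumes the published port 9091 and the /status route of the demo service.

```python
from collections import Counter
import urllib.error
import urllib.request


def http_status(url, timeout=2.0):
    """Return the HTTP status code of a GET request, or "error" on failure."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as exc:
        # 4xx and 5xx responses still carry a status code worth counting.
        return exc.code
    except (urllib.error.URLError, OSError):
        # Connection refused, DNS failure, timeout, and similar problems.
        return "error"


def run_load(url, total_requests, fetch=http_status):
    """Call fetch(url) total_requests times and count the outcomes."""
    counts = Counter()
    for _ in range(total_requests):
        counts[fetch(url)] += 1
    return counts
```

With the stack running, calling run_load("http://localhost:9091/status", 500) should produce enough spans to populate the views below. The fetch parameter exists so the function can be exercised without a network, for example with a stub that always returns 200.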

Jaeger Tracing

On the Tracing view, we can track the flow of requests across the platform and gather useful data that helps teams understand performance issues as well as complex distributed architectures.

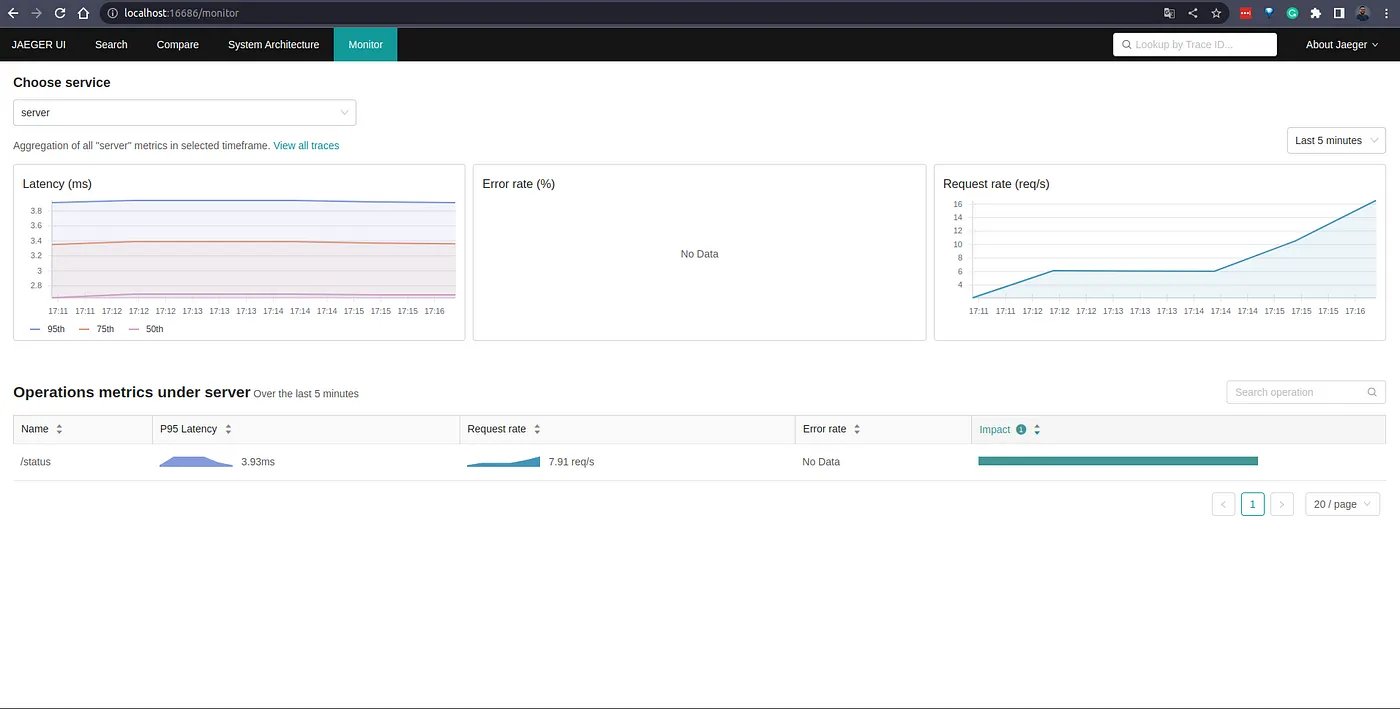

Jaeger SPM

The Jaeger Service Performance Monitor provides a service-level aggregation, as well as an operation-level aggregation within the service, of Request rates, Error rates, and Durations (P95, P75, and P50), also known as RED metrics.

This tab is populated with the data created by the spanmetrics processor on the OpenTelemetry Collector, which is also available in Prometheus, as we can see below.

Prometheus Metrics

As described above, the spanmetrics processor creates two metrics: calls_total and latency_bucket.
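The duration percentiles shown in SPM (P95, P75, and P50) are estimated from the cumulative counts in latency_bucket. The sketch below approximates the linear interpolation that Prometheus's histogram_quantile function applies inside a bucket; the estimate_quantile helper and the sample bucket counts are made up for illustration.

```python
def estimate_quantile(q, buckets):
    """Estimate the q-quantile from (upper_bound, cumulative_count) pairs.

    buckets must be sorted by upper bound and end with a float("inf") bucket.
    """
    if not 0 < q <= 1:
        raise ValueError("q must be in the range (0, 1]")
    total = buckets[-1][1]
    if total == 0:
        return float("nan")

    rank = q * total
    lower_bound, lower_count = 0.0, 0
    for upper_bound, cumulative in buckets:
        if cumulative >= rank:
            if upper_bound == float("inf"):
                # No interpolation is possible in the open-ended bucket,
                # so fall back to the highest finite bound.
                return lower_bound
            fraction = (rank - lower_count) / (cumulative - lower_count)
            return lower_bound + (upper_bound - lower_bound) * fraction
        lower_bound, lower_count = upper_bound, cumulative
    return lower_bound


# Made-up cumulative counts: 50 calls <= 10 ms, 90 <= 50 ms, 100 <= 250 ms.
sample_buckets = [(10, 50), (50, 90), (250, 100), (float("inf"), 100)]
for q in (0.5, 0.75, 0.95):
    print(q, estimate_quantile(q, sample_buckets))
```

Because the result is interpolated, its accuracy depends on how well the bucket bounds match the real latency distribution.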

Conclusion

This is a very simple example, and the main idea is to provide insights into what type of telemetry data could be collected using OpenTelemetry auto instrumentation.

The code is available on my GitHub account; feel free to explore it and run it in your local environment.

Let me know if you’re using this at your company to collect telemetry data, or plan to.

Thanks 😃